Using MLflow

Overview

MLflow is a framework for managing the full machine learning lifecycle.

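Its main components are:

- MLflow Tracking: records, compares, and visualizes the results of training and testing runs with different hyperparameters.
- MLflow Projects: packages ML code in a reproducible, reusable form.
- MLflow Models: makes deploying models easier.
- MLflow Model Registry: provides a centralized model store for managing the full model lifecycle.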

The component I mainly use at the moment is the third one, so these notes cover MLflow Models first.

MLflow Models

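MLflow Models is essentially a format for packaging machine learning models so that downstream tools can use them.

The format defines a convention for saving a model in different "flavors" that downstream tools can understand.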

Storage Format

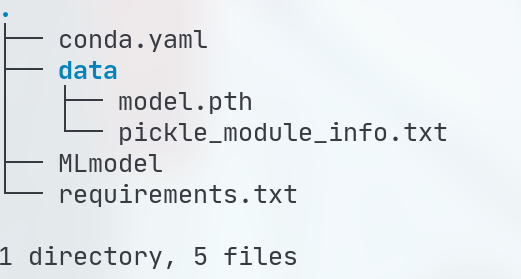

Each MLflow Model is a directory containing arbitrary files, with an MLmodel file at the root of the directory that can define multiple flavors.

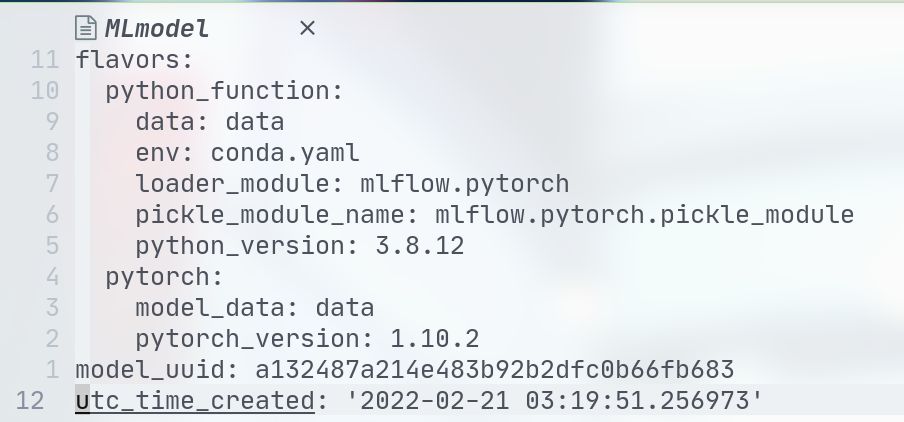

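Flavors are the key concept of MLflow Models. Abstractly, a flavor is a convention that deployment tools can use to understand the model.

MLflow defines several standard flavors that all of its built-in deployment tools support, such as the python_function flavor, which describes how to run the model as a Python function.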

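The model directory holds the MLmodel file alongside whatever files the flavors need, such as the serialized model and environment specifications (for example conda.yaml and requirements.txt).

The MLmodel file is a YAML file that lists each flavor together with the attributes a tool needs in order to load the model in that flavor.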

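A model whose MLmodel file lists both the pytorch and the python_function flavors can be used by any tool that supports either flavor. For example, a model with the python_function flavor can be served as a local REST endpoint with the mlflow models serve command, passing the model's location through its -m option.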


Model Signature

A model's inputs and outputs are either column-based or tensor-based.

Column-based inputs and outputs can be described as a sequence of (optionally) named columns, each with a type specified as one of the MLflow data types. Tensor-based inputs and outputs can be described as a sequence of (optionally) named tensors, each with a type specified as one of the numpy data types.

Signature Enforcement

Schema enforcement checks the provided input against the model's signature and raises an exception if the input is not compatible. It only applies when using MLflow model deployment tools or when loading models as python_function. It has no effect on models loaded in their native flavor.

Name Ordering Enforcement

The input names are checked against the model signature. If any inputs are missing, MLflow raises an exception; extra inputs are ignored. If the input schema defines input names, inputs are matched by name; otherwise they are matched by position.

Input Type Enforcement

For column-based signatures, MLflow performs safe type conversions where necessary. Only lossless conversions are allowed: for example, int to long and int to double are accepted, but long to double is not.

For tensor-based signatures, type checking is strict: any type mismatch raises an exception.

Handling Integers With Missing Values

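Integer data with missing values is typically represented as floats in Python, so an integer column declared in the signature would reject such data at inference time.

The best approach is to declare integer columns as doubles whenever they can contain missing values.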

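Handling Date and Timestamp

For datetime values, Python (through numpy) has the precision built into the type: for example, datetime64[D] has day precision and datetime64[ns] has nanosecond precision.

Datetime precision is ignored for column-based model signatures but enforced for tensor-based signatures.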

Log Models with Signatures

Pass a signature object as an argument to the appropriate log_model call to include a signature with the model. The signature object can be created by hand or inferred from datasets with valid model inputs and valid model outputs.

Column-based example

The following example demonstrates how to store a model signature for a simple classifier trained on the Iris dataset:


The same signature can be created explicitly as follows:


Tensor-based example


The same signature can be created explicitly as follows:


Model Input Example

Model inputs can be column-based (i.e., pandas DataFrames) or tensor-based (i.e., numpy.ndarrays).

A model input example provides an instance of a valid model input. It is stored as a separate artifact and referenced in the MLmodel file.

Log Model with column-based example

An example can be a single record or a batch of records. The sample input can be passed in as a pandas DataFrame, a list, or a dict. The given example is converted to a pandas DataFrame and then serialized to JSON using the pandas split-oriented format.


Log Model with Tensor-based example

An example must be a batch of inputs. Axis 0 is the batch axis by default unless specified otherwise in the model signature. The sample input can be passed in as a numpy ndarray or as a dict mapping strings to numpy arrays.


Model API

MLflow includes integrations with several common libraries. For example, mlflow.sklearn contains save_model, log_model, and load_model functions for scikit-learn models.

Additionally, the mlflow.models.Model class can be used to create and write models. It has four key methods:

- add_flavor adds a flavor to the model. Each flavor has a string name and a dict of key-value attributes, where the values can be any object that can be serialized to YAML.
- save saves the model to a local directory.
- log logs the model as an artifact in the current run using MLflow Tracking.
- load loads a model from a local directory or from an artifact in a previous run.

Pytorch

The mlflow.pytorch module defines utilities for saving and loading MLflow Models with the pytorch flavor.

The mlflow.pytorch.save_model() and mlflow.pytorch.log_model() methods save PyTorch models in MLflow format.

mlflow.pytorch.load_model() loads MLflow Models with the pytorch flavor as PyTorch model objects. Because these models also have the python_function flavor, they can be loaded with mlflow.pyfunc.load_model() as well, and the resulting PyFunc model can be scored with both DataFrame input and numpy array input.